INDEX

-

WHY DOES YOUR MUSIC SOUNDS DIFFERENT?

-

THE CREATIVE PROCESS

-

LEVEL 1: CIRCLE OF CONFUSION

-

LEVEL 2: THE STUDIO PARADOX

-

LEVEL 3: YOUR BRAIN IS A LARGE EQ

-

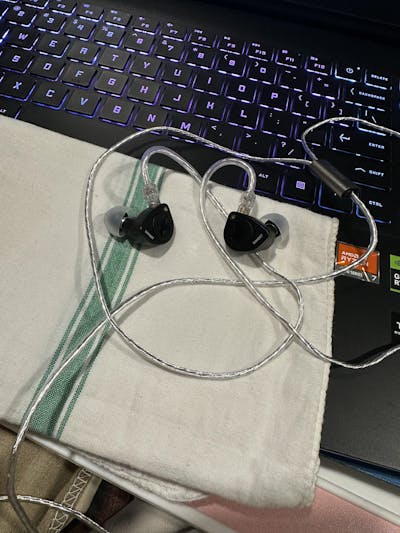

LEVEL 4: CONSUMER GEARS

-

LEVEL 5: NEW AGE MUSIC ACCESS

-

HOW CAN YOU FIX IT ALL?

-

WHAT WE KNOW, WHAT WE HAVE LEARNT?

-

IT’S NOT THAT DEEP

WHY DOES YOUR MUSIC SOUND DIFFERENT EVERYWHERE?

Have you ever asked yourself why your favorite tracks sound good on your speaker system, yet struggle to sound good on headphones? Or why they sound great on your small Bluetooth speaker, but muffled in your car? Or, even worse, why they sound quite good on your cheap earphones yet worse on your expensive IEMs? Something just doesn’t sound right. Well, it might not be your gear. Our brains are a big EQ, and they need only a few days to adjust to a new transducer. Besides, you bought that gear after researching for so many days, and all those people can’t be wrong, right?

This issue is part of a deeper problem in audio, one that even seasoned professionals ignore in their listening sessions. It leads them to misjudge equipment, and the result is a snowball effect that produces so much noise that it keeps us from choosing the right set of equipment for ourselves.

Harman researcher, the legendary Mr. Floyd Toole, acknowledged this problem. He named it “The Circle of Confusion”. After learning about this issue, you will notice the pitfalls of the current Audiophile World. The way you view this hobby is going to change forever, for better or worse, who knows. But in the end, it will be very hard to fool you, and you will be able to pass your own judgment with confidence.

THE CREATIVE PROCESS

Let’s start with how music actually gets to your ears.

Imagine you went to a live jazz club. The atmosphere is filled with smoke. The sax player is just a few feet away from you. The bass player is making the wooden floor vibrate. The drums crackle whenever there’s a big hit on the kick. The whole club is vibrating with music. Glasses, chandeliers, tables, everything is reflecting sound in its own way. You can feel the room singing with the band. That’s called “Sound Production”: real instruments, without normalization, without any filter or correction, creating an atmosphere in a real space.

SOUND REPRODUCTION

Now, take the same band with all of their instruments, and put them in a glass box. Place microphones at each sound source and give the players tiny headphones to monitor what they are playing. Then a person listens to the playback in a different room, and that room has its own characteristics. That person adjusts whatever aspects of the music they think need correcting. Then they color each instrument to their own taste. The mix they render at the end reaches your TWS/IEMs/headphones, which ignore the room completely.

So, what you are hearing is not the jazz club. It’s a polluted version filtered through equipment upon equipment, rooms upon rooms, another person’s ears and taste, and your own gear’s signature. And that’s the issue. From “PRODUCTION” to “REPRODUCTION”, music goes through a lot of translation steps, and each step adds its own color, flavor and accent to the sound. That is simply what sound reproduction requires. The mic might sound great to the audio engineer, the mixing room might sound great to the producer, the mastering room might sound great to the mixing engineer. Everyone might be the best in their own way, but none of it is a universal truth.

LEVEL 1: CIRCLE OF CONFUSION

Toole’s concept is simple yet devastating for Audiophiles.

Music is mixed on flawed monitors > Consumers judge gear based on how it plays those imperfect mixes > Audio equipment manufacturers design gear to make those mixes sound good. The loop keeps repeating, with no fixed reference or standard for what “ACCURATE” really means.

It’s like editing a photo on a monitor that is too warm/yellow. The pictures come out too blue (after color correction on the warm monitor), so phone screens are then made too yellow to make those “blue pictures” look natural. In the end, when you see the picture on a good monitor, it looks washed-out blue. It has always been like this in audio. We are constantly just hiding flaws with more flaws.

THE CLASSIC - YAMAHA NS10

For example, the Yamaha NS-10M was very popular among music producers because of its ability to expose flaws in the music. But the speakers were inherently bright in the mid-range, with sharp treble and a bass cut. This resulted in an era of music that is too mid-scooped, with very high bass and treble, and that sounds peaky on newer systems. (Not quite sure, but Nirvana used the NS10 heavily to produce their music, so their music suffers from poor intelligibility on modern systems, and you need an NS10-like tonality to get a good performance out of something like the “Nevermind” album.)

LEVEL 2: STUDIO CALIBRATION PARADOX

Why do even professionals & million-dollar studios struggle?

Here is the thing. Suppose you calibrated your speakers to absolute perfection to hear the “Real Truth”. That should solve the problem, right? As easy as it gets. Well, that’s where the confusion deepens even further. Even top-tier studios struggle with the same problem, despite following every standard in the book.

To start things off, professional studios often follow very popular standards, like:

1. LEDE (Live End Dead End)
2. SMPTE (Society of Motion Picture and Television Engineers)
3. Dolby Atmos Home Entertainment Guidelines

Yet all these trusted standards, backed by expensive cutting-edge gear, cannot solve the problem. But why?

(Again, most studios are not standardised)

1. Rooms aren’t standardised. Suppose one studio spends thousands on treatment, bass traps, calibrated monitors and DSP correction. Geometry, materials and reflections still differ from room to room. A mix that sounds perfect in one room may sound tilted in another. Calibration itself is not standardised either. Calibration doesn’t equal universality, and this is where the problem with calibrated rooms starts.

2. Acoustic Geometry is Still Unique. A mix room in India with wood floors and drop ceilings won’t sound like an LA room with concrete walls and angled ceilings. Each studio is different. Calibration corrects the frequency response at one point, but the whole spatial experience cannot be replicated everywhere.

(I wanted to discuss Impulse Response, Complex Signal Transformation and BRIR to show you how reflections are part of the frequency response, since a measurement made with the Farina method captures both the amplitude and the time domain, but I am reserving this for future articles.)

3. Frequency Response Alterations – Reflections are a big problem for studios in general. They create a comb filtering effect that distorts the sound in a way that makes it very hard for a mixing engineer not to be misled, and it causes incorrect EQ decisions during mixing. The peaks and dips in the frequency response, caused by constructive and destructive interference between the direct and reflected sound, also affect the mastering engineer’s perception of spectral balance. They can mask whole ranges as well, which leads to unnecessary compensatory adjustments.

4. Calibration Targets Vary - Each studio has its own idea of what sounds "right." Some want a completely dead room with zero reflections. Others want a bit of liveliness. Some aim for a perfectly flat response (which can sound bright), while others prefer a gentle downward slope. Every engineer swears their approach is best, but if they're all "best," then who’s right? Even in a perfect room, mix decisions are made in the context of speaker playback, which includes natural crossfeed and room interaction, both of which you mostly bypass with personal listening gear. Translation isn't perfection. The goal of mixing is to translate, not to replicate; even an attempt at replication ends up as some form of translation. If a mix sounds good everywhere, it's successful, even if it doesn't sound identical everywhere, no matter whether it follows NARAS or AES standards. (No need to go deep into standards as an Audiophile.)

5. Music is not made for headphones - Music production is mostly a speaker-focused environment, in which all the big decisions, from mic placement to final mastering, are made on speaker playback with room acoustics. Engineers "earn their ears" on speakers, not on headphones. They make creative choices on speakers and optimize for speaker-based consumer playback.

IEMs and headphones are quality-control tools, which means they are used to test how the speaker-optimized mix sounds in an isolated situation. And even if an engineer does make something for both earphones and speakers, they mostly tune it for low-grade equipment and not for something Hi-Fi. The economic and technological reality is cruel: the entire professional music production business is built on speaker reproduction, because that's where the business is and where the basic psychoacoustics function correctly. Headphones receive the after-effects of decisions made for an entirely different acoustic paradigm.
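The comb filtering from point 3 above can be put into numbers. What follows is only a minimal sketch, assuming one direct sound plus a single reflection that travels a bit farther; the function names, the 0.5 reflection gain and the 343 m/s speed of sound are illustrative assumptions, not measurements from any real studio.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed approximate value for air at room temperature


def notch_frequencies(extra_path_m, max_hz=20000.0):
    """Frequencies (Hz) where one reflection arrives exactly out of phase.

    extra_path_m is how much farther the reflection travels than the
    direct sound (hypothetical value, chosen by the caller).
    """
    delay = extra_path_m / SPEED_OF_SOUND  # seconds
    notches = []
    k = 0
    while True:
        freq = (2 * k + 1) / (2 * delay)  # odd multiples of 1 / (2 * delay)
        if freq > max_hz:
            break
        notches.append(freq)
        k += 1
    return notches


def level_db(freq_hz, extra_path_m, reflection_gain=0.5):
    """Level of direct + reflected sound relative to the direct sound alone."""
    delay = extra_path_m / SPEED_OF_SOUND
    phase = 2 * math.pi * freq_hz * delay
    real = 1 + reflection_gain * math.cos(phase)
    imag = -reflection_gain * math.sin(phase)
    return 20 * math.log10(math.hypot(real, imag))


if __name__ == "__main__":
    extra_path = 0.343  # metres, i.e. roughly 1 ms of extra travel time
    print("first notches (Hz):", [round(f) for f in notch_frequencies(extra_path)[:4]])
    for freq in (500, 1000, 1500, 2000):
        print(freq, "Hz:", round(level_db(freq, extra_path), 1), "dB")
```

With about 1 ms of extra travel the first dip lands near 500 Hz and the pattern repeats every 1 kHz, which is why a small change in listening position or wall distance moves the peaks and dips around.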

LEVEL 3: YOUR BRAIN IS A LARGE EQ

Music is more than sound. It has an emotional attachment that makes us groove; it’s not just random noise. So, technically, we are not wired to consume it like any other sound. “Psychoacoustics” is the field that explores the connection between acoustics and the human brain, and it will be a major focus of this series. In the end, a lot goes on between sensing SPL at the eardrums and interpreting it in the brain. If you have heard of HRTF (head-related transfer function), good; I will explain it in later sections. Just remember that HRTF is not the only thing that decides what good audio is to you.

NOSTALGIA IS UNDERRATED

Here's what surprises me the most. Our brains are not just passively receiving sound and translating it into an understandable language; they also actively interpret everything based on our history. Just answer this simple question: how much time would you spend if you had access to your school-time Walkman with an SD card filled with songs, along with the earbuds that cost less than peanuts? Days, right? You might enjoy something more precious with that dated hardware, something your present hi-res gear can’t give you. At least, that is the case for me.

ARE OUR BRAINS DUMB OR SMART?

But when you are actively participating in this hobby as an adult, you need to take care of a few things before you can understand your ears & brain. If you grew up with very bass-heavy headphones, thin-sounding gaming headphones, or even Beats, your neural pathways (Auditory Ventral Stream & Dorsal Stream) are adapted to that sound signature. You had no control over that. However, you can be informed. Say you are accustomed to bass-heavy genres. When you hear a clean, neutral sound, your brain goes “I need bass / This sounds broken / Where is the low-end?”. Fortunately, brains can retrain themselves. If you spend enough time with a neutral-sounding system instead of chasing your history, you will suddenly hear music that is not masked, you will notice timbre that you never noticed, stereo imaging will become clear, and slowly you will realize that the boomy bass on poorly designed headphones was actually masking a lot of musical detail.

TIMBRE…TIMBRE…TIMBRE…

There is a really interesting paper that talks about timbre and spatial correlation. It needs some graph reading, so I am saving it for the 3rd article, “Measurements”.

For now, let’s understand that our brain is constantly adjusting to the environment around us. Take an example: suppose your wife/partner is calling you from the bedroom, the bathroom, the hall, an empty street, or the middle of a crowd. Your partner will always sound the same, no matter how much interference their voice picks up from reflections, no matter how hollow they sound. They will sound like themselves everywhere. Your brain does the work of separating the voice from the space automatically. (Again, thanks to the pathways mentioned above.)

So, everything needs time, and it is always possible to get familiar with a new signature. You just have to be a bit open-minded and adventurous enough.

YOUR EYES ARE LYING

To add to this whole thing, your brain hears what you see and what you feel in your hand. That can sometimes override the judgement of your auditory system to the point where you can never truly distinguish a bad sound from a good one.

You believe your eyes, more than your ears (sometimes)

In 1994, Mr. Sean Olive and Mr. Floyd Toole debunked the myth that listeners can ignore visual cues.

Forty listeners participated in identical tests, one blind and one sighted.

The results were shocking. The inexpensive speakers dropped significantly in ratings once they were revealed to the listeners, despite having the exact same sound the listeners had evaluated earlier. This makes high-resolution music not so different from the wine industry, where there is a huge correlation between price and label, yet not so much in taste between different parts of the world.

(Don’t get too carried away by the year 1994. As a matter of fact, Thomas Edison claimed back in 1901 that there is no colour in the Phonograph, and he later tested this with real people in a concert hall to prove his hypothesis. There were a lot of caveats to that data, but it is important to understand that colouration and transparency are not new concepts; people have been working on these advancements for a long time.)

So, you must understand that if your brain wants to hear a difference in sound (for any reason, like the money you spent or how the gear looks), it will definitely hear the very same sound as a different one. Because at our core, we are human, and we use all of our sensory inputs to stay relevant in a world where the only constant is change.

REVIEWER WORSHIPPING

In the modern world, I am sensing a far more dangerous bias that shouldn’t be there in the first place. I call it “Reviewer Worshipping”. People often take reviewers a bit too seriously, so whatever a reviewer calls good, people also accept as good. This has left us with nothing but confusion and ignorance. We fail to understand that most reviewers are just as human as us, and they don’t have magical ears. Yes, they can compare products better than us, and a few of them can hear a wider frequency range than you, but that doesn’t make them a better judge than someone who can hear only up to 15 kHz.

NEUTRAL TARGET PANDEMIC

Well, all of this could have been easily ignored if the review community had never released their so-called “Neutral Targets” as a yardstick for IEMs. People often forget that we all hear differently, and on top of that, how a reviewer built the target is questionable (in many possible ways). More often than not, if you put most neutral targets through normalisation, you can see a very well-defined deviation from what the average human hears. So stop believing that random Neutral Targets are neutral for your ears too, let alone a match for your preference.

You are not gullible, yet at the same time your brain will do everything in its power to justify what it shouldn’t. Your brain is basically an illusion within yourself.

LEVEL 4: CONSUMER GEARS

Suppose you are a mixing engineer, and your producer gives you this data.

X% of the population listens to 90% of their music while driving. Y% of the population listens to 80% of their music while cooking or at the gym. Z% of the population listens to music while doing paperwork. What would you do? How can you cater to all these people with just one track?

The answer is simple: make tracks as neutral as possible, and let audio equipment makers tune their equipment in a specific way to cater to each audience. There are also some intelligent systems that adapt to the ambient sound and adjust the audio output accordingly. For example, some luxury cars have adaptive EQ that increases the bass and treble to fight through the road noise.

But here’s the kicker: when some producers make music for the road or for earphones (as everything is suffering from the circle of confusion), they want to add spice to the mix (their own sense of colour). So, instead of maintaining a good balance, they compress the dynamic range to make everything louder, and they pump up the bass and treble to make the sound exciting. The result?

Whenever you listen to these tracks in a well-maintained acoustic environment, you will get a lot of harshness. The road noise and the muddy bass of earphones helped to mask a lot of the imperfections and inaccuracies in the mix, but when you listen indoors, the mixes feel like sandpaper rubbing in your ears. I have only discussed the car; now imagine the same with Bluetooth speakers and gyms.

Some tracks that are meant to be played in a car won’t sound good on a Hi-Fi system, and some tracks that are meant to be played on phone speakers won’t sound good on headphones. Some tracks that are not recorded binaurally will not sound good on headphones. In the end, we should stop telling ourselves that it’s always the gear; sometimes it’s the music itself.

LEVEL 5: NEW AGE MUSIC ACCESS

Before starting this topic, let’s understand an audio concept called Dynamic Range (not to be confused with “dynamics”, which is very subjective and means different things to different people). Dynamic Range simply means the difference between the quietest sound and the loudest sound in the signal.

But our brains are not so precise that they can sense each and every variation in the signal. So what they do is take a window of time, average the signal amplitude over it, and interpret that as how loud the music is.

It’s like having two series of numbers, 1,2,3 and 4,5,6. Our brain can’t read 1,2,3/4,5,6 individually. It will read (1+2+3)/3 = 2 and (4+5+6)/3 = 5. So, 1,2,3 becomes 2 and 4,5,6 becomes 5. This is a very simplified version of what happens with an audio signal; signals don’t work this simply, and this is just an example. Real signals such as sound are averaged with Root Mean Square (RMS). What we need to understand is that we don’t judge loudness from the individual peaks/dips in a signal (*not talking about Frequency Response here); we only perceive the average value over a span of time.
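To make the averaging idea above concrete, here is a small sketch that compares the plain average with Root Mean Square. The helper names `mean` and `rms` and the test sine wave are my own illustrative choices; this is a toy calculation, not a model of how hearing or any loudness meter actually works.

```python
import math


def mean(values):
    """Plain arithmetic average, as in the 1,2,3 example."""
    return sum(values) / len(values)


def rms(values):
    """Root Mean Square: square each sample, average, then take the square root."""
    return math.sqrt(sum(v * v for v in values) / len(values))


# One full cycle of a sine wave with peak amplitude 1.0 (illustrative signal).
sine = [math.sin(2 * math.pi * n / 1000) for n in range(1000)]

if __name__ == "__main__":
    print("mean of 1,2,3:", mean([1, 2, 3]))
    print("mean of 4,5,6:", mean([4, 5, 6]))
    print("rms  of 1,2,3:", round(rms([1, 2, 3]), 3))
    print("mean of sine :", round(mean(sine), 6))
    print("rms  of sine :", round(rms(sine), 3))
```

The sine wave shows why RMS is used for audio: the signal swings equally above and below zero, so its plain average is practically zero, while its RMS stays at about 0.707 of the peak and reflects the energy in it.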

Now comes the best (worst) part, Dynamic Range Compression or DRC.

You might’ve heard that modern music is fighting the Loudness War. The aim is simple: wherever it is played, the music should sound the loudest. Why? Typical attention-grabbing syndrome? I can’t answer that, since I am not involved in the music industry and I haven’t talked to any producer about this topic. But it is widely accepted that most producers make music louder so the lows and highs sound “better” (excessive).

So, what they do is simple: they chop off the peaks and then turn everything up. With the peaks lowered, the whole track can be boosted on the dBFS scale without clipping, which makes the average level higher (I will talk about dB scales later in the series). But it also takes a lot away from the music. (I am not talking about lossy vs lossless here; I will talk about that later.)

The music sounds lifeless; each instrument sounds like it was recorded with equal importance. The dynamics (the subjective audiophile term) become a lot flatter and drier. The sound feels like it’s inside your head and coming from a wall.

Dynamic Range Compression is essentially a loudness cheat code. It exploits the fact that your brain measures loudness by averaging energy over time, not by peak levels. Producers use it because in the attention economy, the loudest song in the playlist gets noticed first. But there's a cost - too much compression kills the dynamic life that makes music emotionally engaging. And remember, DRC can be applied to every instrument and then, on top of that, to the whole track when mastering engineers deliver it for Spotify and other lossy media.
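The "loudness cheat code" can be sketched in a few lines. This is a deliberately crude toy, assuming a static compressor with no attack or release, a made-up threshold of 0.25 and ratio of 4:1, and a synthetic signal of my own (a quiet tone followed by one loud burst); real compressors and limiters are far more sophisticated.

```python
import math


def rms(samples):
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def peak(samples):
    return max(abs(s) for s in samples)


def crest_factor_db(samples):
    """Peak-to-RMS ratio in dB: a rough indicator of how dynamic a signal is."""
    return 20 * math.log10(peak(samples) / rms(samples))


def compress(samples, threshold=0.25, ratio=4.0):
    """Toy static compressor: anything above the threshold is scaled down."""
    out = []
    for s in samples:
        mag = abs(s)
        if mag > threshold:
            mag = threshold + (mag - threshold) / ratio
        out.append(math.copysign(mag, s))
    return out


def normalize(samples, target_peak=1.0):
    """Make-up gain: bring the highest peak back up to target_peak."""
    gain = target_peak / peak(samples)
    return [s * gain for s in samples]


# Illustrative signal: one second of a quiet tone, then a short loud burst.
RATE = 1000
quiet = [0.1 * math.sin(2 * math.pi * 5 * n / RATE) for n in range(RATE)]
burst = [1.0 * math.sin(2 * math.pi * 5 * n / RATE) for n in range(RATE // 10)]
signal = quiet + burst

original = normalize(signal)
squashed = normalize(compress(signal))

if __name__ == "__main__":
    for name, data in (("original", original), ("squashed", squashed)):
        print(name, "peak:", round(peak(data), 3),
              "rms:", round(rms(data), 3),
              "crest factor dB:", round(crest_factor_db(data), 1))
```

Both versions hit the same peak, but the squashed one has a higher RMS and a smaller crest factor: it reads as louder on average while the gap between the quiet tone and the burst has shrunk.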

But there is still hope, since the loudness war is winding down day by day and we are getting more dynamic music. (Mainstream media is still not there to make a change, but independent creators are taking DRC seriously.)

NOTHING ELSE MATTERS, OTHER THAN DRC

The one example that always makes me laugh is Metallica. Around 2008 they hired a mixing engineer who compressed their songs so heavily that people found them difficult to listen to. But later, when Guitar Hero (a game where you play guitar along with the music) added a less compressed version of their songs to the game, people went mad. They asked for a re-release, and later Metallica might’ve fixed that.

So, accept as soon as possible that most current music is made to be played on small speakers/boom boxes/car speakers, and to sound loud. Switching between eras of music is no less than switching between planets. For example, take Pink Floyd: the dynamics in “The Dark Side Of The Moon” are almost on par with good orchestral music, even to this date.

LET’S FIX IT

There are so many deeper levels of problems affecting you as a listener that I could go on all day. The cross-feed paradox, the lack of spatial awareness in modern audio, audio codecs and their misuse; the list goes on. But what can we do to protect ourselves from all this?

- Learn what neutral is – I know neutral is boring and you need music for fun, but get your fundamentals and expectations right first. Pick well-regarded headphones to understand what the standard is. You might like it, you might not. But the process of becoming a neutral listener is going to be very effective, and if you have a strong sense of what neutral is, you can judge other things.

Now, don’t run to measurement websites and buy whatever matches your favourite reviewer’s idea of neutral. I will talk about this in my 3rd Episode (if my maths is correct). Until then, listen to different kinds of neutral and build a baseline.

- Stop chasing expensive gears – Audio gear is a complex system, and its pricing is a multivariate equation of trend, economy and advertisement. In my honest opinion, there is no reason to buy anything above $500 if you are simply chasing a good-sounding IEM.

The higher you go, the more you have to choose an IEM that caters to your specific taste. But that’s a different thing altogether, since you can’t put a price on liking a Hi-Fi system that is tuned to your taste.

I would’ve spent $2500 on a ZMF Atrium Open if I had the money, but you don’t need uber-expensive gear to understand sound. One headphone/IEM might sound good with some songs, while other songs in the same genre might prefer another. And remember, there is no correlation between price and tonality. (I wanted to cite a paper every time I make a contrarian statement, but I forgot which paper it was.) And most probably, it’s not the headphone itself; it might be the track, and you’re fixing a flaw with a flaw.

- Match gear to your context – You will see a lot of different opinions about newly launched headphones, and about how bad their tonality looks in graphs. But we must remember that companies like Apple & Sony hold so much research power that they can outdo anyone in the world of audio. Bluetooth headphones might be well suited to a noisy commute, but not to a studio. A neutral earphone is suited to indoor use and not to the gym. Eliminating the extremes still leaves us with quite a bit of choice. Try things and find out what works best for you, since you now know where to look.

- EQ – As simple as it gets: EQ your gear. And no, don’t go to squig and adjust based on a graph; learn where you need correction and fix only that. Give it a little downward slope or a bit of upward tilt; who knows, it can save you real-life money. And don’t believe anyone without experiencing it for yourself. You are underestimating a great combination: your personal HRTF + taste.

- Challenge your preferences – Although it is very important to chase your own signature, it is also important to understand accuracy. If you don’t have a baseline, you will be easy to trick. Do blind tests and ask yourself: is it boring, or is it accurate / natural? No one can listen for you; you must do that for yourself. Your HRTF + preference is unique, and chances are you won’t meet another person in the world who has the exact same combination as you.
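For the "little downward slope" mentioned in the EQ point above, the arithmetic behind a simple tilt can be sketched as follows. The function name, the 1 kHz pivot and the -1 dB per octave slope are purely illustrative assumptions, not a recommended setting and not any particular EQ app's behaviour.

```python
import math


def tilt_gain_db(freq_hz, slope_db_per_octave, pivot_hz=1000.0):
    """Gain (dB) of a straight-line tilt that is 0 dB at the pivot frequency.

    A negative slope darkens the sound (more bass, less treble);
    a positive slope brightens it.
    """
    octaves_from_pivot = math.log2(freq_hz / pivot_hz)
    return slope_db_per_octave * octaves_from_pivot


if __name__ == "__main__":
    # Hypothetical gentle downward tilt of 1 dB per octave around 1 kHz.
    for freq in (62.5, 125, 250, 500, 1000, 2000, 4000, 8000, 16000):
        print(f"{freq:>7} Hz: {tilt_gain_db(freq, -1.0):+.1f} dB")
```

Typing numbers like these into the bands of a graphic or parametric EQ is one way to try a gentle tilt by ear before committing to anything more drastic.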

WHAT WE KNOW, WHAT HAVE WE LEARNED?

There's no single definition of perfect sound. But there is such a thing as informed listening. When you understand what shapes what you hear, from flawed studios to your own biases, you stop being a passive listener. You become an active interpreter. And in a world full of flawed feedback loops and conflicting references, that awareness is your greatest tool.

The Circle of Confusion is real. Nobody has a universal reference for "correct" sound. Studio flaws, monitor colorations, and room acoustics all shape the music you hear. Headphones can reveal or distort mixes depending on how they're tuned. Your best defence? Train your ears, learn what neutral sounds like, and EQ with intention, not impulse.

TLDR: IT’S NOT THAT DEEP

There is a song that gives you goosebumps every single time. The one that takes you back to that perfect moment, maybe driving with friends after your breakup, maybe alone in your college hostel at 3 AM when nothing in life made sense. That emotional connection you feel has NOTHING to do with perfect frequency response.

The Circle of Confusion that drives engineers crazy? It's also the reason why your dad's old car speaker can make him cry with the right song. Why your cheap earbuds from college can still give you chills. Why that terrible Bluetooth speaker at the beach party sounded like the most fun thing in the universe.

Because here's what nobody tells you:

Music isn't about achieving perfect playback. It never was. It's about the moment when sound becomes memory, when frequencies become feelings. Think about it. The Beatles recorded on 4-track machines that would make today's bedroom producers laugh. Bob Marley's vocals were captured on mics that we'd call "vintage" today. Your parents fell in love to songs played on FM radio, literally mono, band-limited, compressed to hell. And yet, those songs moved the world.

Here's what I realized after years of chasing perfect sound: every piece of gear you own, from your car's boomy system to your analytical headphones, is just a different window into the same song. Some windows are clear, some are tinted, some are stained glass. But they're all showing you something real.

The tragedy isn't that we don't have perfect playback. The tragedy is when we stop hearing the music because we're too busy listening to the equipment. I’ve seen audiophiles with thousand-dollar systems who haven't enjoyed an album in years. They're not listening to music anymore; they're listening to their tweeters. Meanwhile, someone's having a religious experience with a 50-dollar Bluetooth speaker because they're HEARING the song.

Yes, learn about audio. Yes, train your ears. Yes, understand the science. But NEVER let the pursuit of perfect sound kill your ability to just LISTEN.

Written By - Argha

YouTube - https://www.youtube.com/@audiowithargha

Discord - https://discord.gg/wCcusAAHfw

Credits

Peer Review

Audio Engineering Society, India. [ https://www.facebook.com/aesindia.org ]

Mr. Stan Alvares (Hon. Secretary)

Research & Guidance

- Sean Olive

- Floyd Toole

- David Miles Huber

- Emiliano Caballero

- Robert Runstein

- Agnieszka Roginska

- Paul Geluso